For the scientific method to work, reproducibility is imperative: it underpins the validity of, and trust in, scientific findings. To confirm the integrity of a peer-reviewed paper or journal article, other researchers must be able to repeat the same experiments using the same metrics and arrive at the same result.

Image Credit: Gorodenkoff/Shutterstock.com

Lack of Replication and Reanalysis in Laboratories

Replication means repeating, step for step, what the original researcher did to see whether the same conclusion is reached. Reanalysis, in contrast, means taking the original data and running it through alternative statistical methods and interpretations to see whether the same result is obtained.

Together, these two approaches help validate scientific papers. The rate at which the same result is obtained reflects a paper's "reproducibility". Yet current scientific practice is lacking in both replication and reanalysis.

A commonly cited example is the Amgen study of cancer findings (Begley and Ellis, 2012), in which the firm attempted to replicate 53 landmark studies on cancer and found that only six (11%) could be reproduced. The findings of the remaining 47 papers could not be confirmed.

Reproducibility Versus Replicability

Though the terms are often used interchangeably, reproducibility is distinct from replicability, and both matter in data analysis. They are how science corrects and fine-tunes itself. Reproducibility measures how closely results agree when the same analytical method, code, or other means of interpretation is applied again to the same initial data.
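A minimal sketch of a computational reproducibility check is given below: the same analysis is run twice on the same data, and the two results are compared by fingerprint. The `analyze` function, its bootstrap procedure, the seed value, and the sample data are all hypothetical and chosen only for illustration; they are not taken from any of the cited studies.

```python
import hashlib
import json
import random
import statistics


def analyze(data, seed=0):
    """Hypothetical analysis: the mean plus a bootstrap standard error.

    A fixed seed makes the random resampling repeatable, so the same
    data and the same code should always give the same result.
    """
    rng = random.Random(seed)
    boot_means = []
    for _ in range(200):
        resample = [rng.choice(data) for _ in data]
        boot_means.append(statistics.fmean(resample))
    return {
        "mean": statistics.fmean(data),
        "bootstrap_se": statistics.stdev(boot_means),
    }


def fingerprint(result):
    """Hash a result dictionary so two runs can be compared compactly."""
    payload = json.dumps(result, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


# Illustrative measurements (assumed values, not real data).
data = [9.8, 10.1, 10.0, 9.9, 10.2]

first = analyze(data, seed=42)
second = analyze(data, seed=42)

# Same data, same code, same seed: the fingerprints should match.
reproducible = fingerprint(first) == fingerprint(second)
print("Reproducible:", reproducible)
```

Comparing fingerprints rather than raw numbers is a design choice that makes it easy to record the expected result alongside the code and data.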

Replicability, however, relates to different studies. It measures how consistent the results of one study are with prior iterations of that study. To address shortfalls in reproducibility and replicability in the lab, a consensus report from the National Academies of Sciences, Engineering, and Medicine highlights a set of guidelines. According to the report, the following practices will incentivize and strengthen reproducibility and replicability:

- Strengthening of research practices, broad efforts, and responsibilities

- Education and training

- Improving the knowledge and use of statistical significance testing

- Improved record-keeping

- Archival repositories and open data platforms

- Code hosting and collaboration platforms

- Journal requirements, badges, and awards

How Reproducibility and Repeatability are Achieved through Precision, Standard Deviation, and Absolute Standard Deviation

An appropriate level of reproducibility is achieved when laboratory results obtained with the same methods and assay techniques agree. Validation is often at the forefront of the principal investigator's mind. It can only be accomplished when results are checked across multiple laboratories, with each lab taking its own limitations into account.

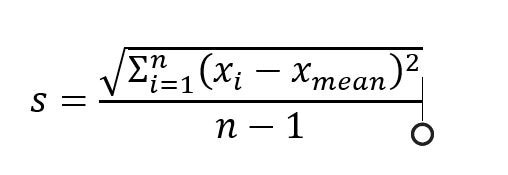

The question now becomes: what calculations can be used to express reproducibility numerically? This is often done using the standard deviation, a measure of an experiment's precision. The term "precision" relates to the random error in a set of measurements and how closely repeated measurements agree with one another. To calculate the precision of a set of experimental measurements, we must first determine the mean and the number of trials:

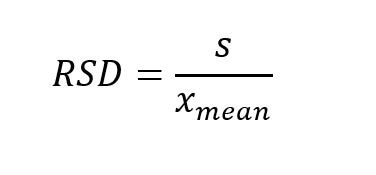

The formula above expresses the absolute standard deviation for a series of measurements. Because the absolute standard deviation reflects how far the values of a data set lie from their mean, and is expressed in the same units as the measurements, it scales with their magnitude and is most informative when comparing data sets with similar means. To compare the variation of multiple data sets within a lab, particularly when sample concentrations differ, the relative standard deviation (the absolute standard deviation divided by the mean, often expressed as a percentage) should be employed.

This is not to be confused with intermediate precision, which accounts for the ever-present variations within a given lab, whether from different equipment, different analysts, or measurements taken at different times.
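The absolute and relative standard deviations discussed above can be sketched in a few lines of Python. This example assumes the sample standard deviation (with an n - 1 denominator) and expresses the relative standard deviation as a percentage of the mean. The function name `precision_summary` and the two data sets are hypothetical.

```python
import statistics


def precision_summary(measurements):
    """Return the mean, absolute SD, and relative SD (%) of a data set."""
    n = len(measurements)
    if n < 2:
        raise ValueError("At least two measurements are needed.")
    mean = statistics.fmean(measurements)
    if mean == 0:
        raise ValueError("Relative SD is undefined when the mean is zero.")
    # Sample standard deviation (n - 1 denominator); an assumption here.
    s = statistics.stdev(measurements)
    rsd_percent = 100.0 * s / abs(mean)
    return {"n": n, "mean": mean, "s": s, "rsd_percent": rsd_percent}


# Illustrative data (assumed values): the same relative scatter
# at a low and at a high sample concentration.
low_conc = [1.02, 0.98, 1.01, 0.99, 1.00]
high_conc = [102.0, 98.0, 101.0, 99.0, 100.0]

low = precision_summary(low_conc)
high = precision_summary(high_conc)

print(f"low:  s = {low['s']:.4f}, RSD = {low['rsd_percent']:.2f}%")
print(f"high: s = {high['s']:.4f}, RSD = {high['rsd_percent']:.2f}%")
```

In this made-up example the absolute standard deviations differ by a factor of about 100, while the relative standard deviations are essentially equal, which is why the relative form is convenient when comparing data sets of different magnitude.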

To apply these terms in practice and account for repeatability in the lab, one must compare the standard deviation of repeatability (s_repeatability) with the standard deviation of intermediate precision (s_intermediate precision). Because intermediate precision includes additional sources of variation, its standard deviation is expected to be the larger of the two. If s_repeatability < s_intermediate precision, then the experiment holds some measure of validity. If the converse is true, then flaws exist in the validation. This could indicate too few measurements, too short a time interval between measurements, or another shortcoming in the study design.
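The comparison described above can be sketched as follows. This is only an illustration under stated assumptions: repeatability is estimated from measurements taken in a single session under unchanged conditions, intermediate precision from measurements spread over different days, and both use the sample standard deviation. The function name `compare_precision` and all values are hypothetical.

```python
import statistics


def compare_precision(repeatability_runs, intermediate_runs):
    """Compare the repeatability SD with the intermediate-precision SD.

    Returns both standard deviations and whether the repeatability SD
    is the smaller of the two, as the validation check expects.
    """
    s_repeatability = statistics.stdev(repeatability_runs)
    s_intermediate = statistics.stdev(intermediate_runs)
    return s_repeatability, s_intermediate, s_repeatability < s_intermediate


# Illustrative data (assumed values, not real measurements).
same_session = [10.01, 9.99, 10.02, 9.98, 10.00]        # one analyst, one day
across_days = [10.01, 9.92, 10.10, 9.95, 10.07, 10.00]  # several days

s_r, s_ip, consistent = compare_precision(same_session, across_days)

print(f"s_repeatability = {s_r:.4f}")
print(f"s_intermediate  = {s_ip:.4f}")
if consistent:
    print("Repeatability SD is smaller, as expected.")
else:
    print("Check the validation: more measurements or longer intervals may be needed.")
```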

Prominence of Reproducibility as a Function of the Scientific Method

The era of science we find ourselves in is unlike any other in history, in that we have vast amounts of data at our disposal. Complementing this is cutting-edge computing power that dwarfs that of all other decades, with Moore's law still in effect. Finally, mirroring this progress is the broad range of tools for statistical analysis used in big data, the Internet of Things, and other fields.

With all these dynamic processes occurring in tandem, we must also address reproducibility issues if we are to grow science as we know it. With reproducibility tied to intrinsic biases, the production of reliable knowledge, and data accuracy, investigators, policymakers, and funders strive to ensure a positive dialogue about these issues. In this way, we can increase the transparency and reliability of science.

Sources:

- Begley, C., Ellis, L. Raise standards for preclinical cancer research. Nature 483, 531–533 (2012). https://doi.org/10.1038/483531a

- National Academies of Sciences, Engineering, and Medicine. 2019. Reproducibility and Replicability in Science. Washington, DC: The National Academies Press. https://doi.org/10.17226/25303.

- Plesser, H. E. (2018). Reproducibility vs. Replicability: A Brief History of a Confused Terminology. Frontiers in Neuroinformatics, 11, 76. https://doi.org/10.3389/fninf.2017.00076

- Hanisch, R. J., Gilmore, I. S., & Plant, A. L. (2019). Improving Reproducibility in Research: The Role of Measurement Science. Journal of Research of the National Institute of Standards and Technology, 124, 1–13. https://doi.org/10.6028/jres.124.024

- Samuel, S., & König-Ries, B. (2021). Understanding experiments and research practices for reproducibility: an exploratory study. PeerJ, 9, e11140. https://doi.org/10.7717/peerj.11140

Last Updated: Jun 30, 2022